SO3. Develop a cognitive mission (re)planner which functions based on interpreted diver gestures that make more complex words

SO3.a. Develop an interpreter of a symbolic language consisting of common diver hand symbols and a specific set of gestures.

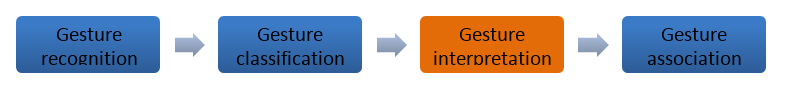

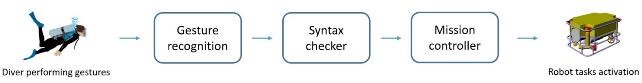

This objective covers the integration of the modules that generate missions from diver gestures. It encompasses the following tasks: gesture recognition, identification of valid phrases (parsing), mission generation, and feedback delivery to the diver via a tablet. The main purpose of the integration is to build a system that is fault tolerant against both human errors (gestures that are badly performed or syntactically incorrect) and software errors (misclassification of gestures).

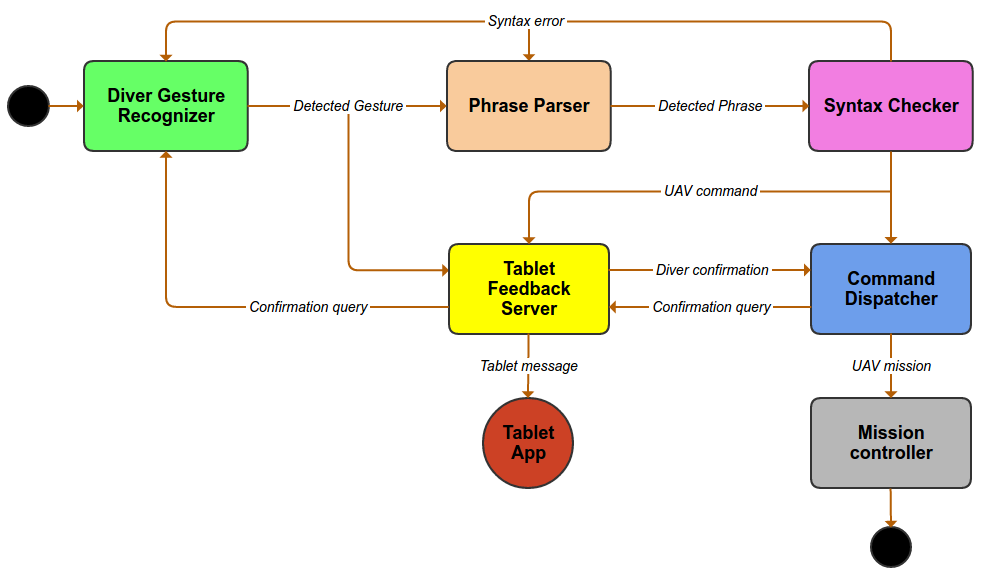

Fig. SO3.1. Tasks of the symbolic language interpreter


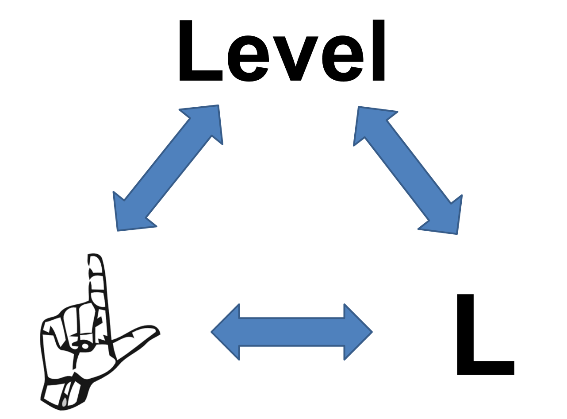

Fig. SO3.2. Gestures, written language and semantics are mapped by a bijective function


Against this background, a gesture-based language for communication between the diver and the robot, called CADDIAN, has been developed. The language is based partly on the consolidated and standardized diver gestures. CADDIAN has been made, to all intents and purposes, backward compatible with the method of communication currently used by divers, in the hope of fostering its adoption among diver communities. Gestures have been mapped to easily writable symbols such as the letters of the Latin alphabet; both the gestures and their written forms are in turn mapped to a semantic function that translates them into commands/messages (see Fig. SO3.2).
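The mapping in Fig. SO3.2 can be pictured as lookup tables that are exact inverses of each other. The sketch below is only illustrative: the gesture names, letter codes and meanings are invented placeholders, not entries from the CADDIAN dictionary in Deliverable 3.1.

```python
# Hypothetical gesture -> written symbol table (placeholder entries only).
GESTURE_TO_SYMBOL = {
    "start_communication": "A",
    "up": "U",
    "down": "D",
    "end_communication": "Z",
}

# Hypothetical written symbol -> semantic meaning table (placeholders).
SYMBOL_TO_MEANING = {
    "A": "START_COMM",
    "U": "MOVE_UP",
    "D": "MOVE_DOWN",
    "Z": "END_COMM",
}


def invert(mapping):
    """Return the inverse of a mapping, failing if it is not one-to-one."""
    inverse = {value: key for key, value in mapping.items()}
    if len(inverse) != len(mapping):
        raise ValueError("mapping is not injective, so it has no inverse")
    return inverse


# Because each table is one-to-one, it can be read in both directions.
SYMBOL_TO_GESTURE = invert(GESTURE_TO_SYMBOL)
MEANING_TO_SYMBOL = invert(SYMBOL_TO_MEANING)


def gesture_to_meaning(gesture):
    """Translate a gesture into its meaning through the written form."""
    return SYMBOL_TO_MEANING[GESTURE_TO_SYMBOL[gesture]]
```

Checking invertibility when the tables are built is one simple way to guarantee that no two gestures collapse onto the same written symbol or command.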

Because CADDIAN is a language for communication between the diver and the robot, the list of messages/commands identified is strictly context dependent. The first step of its creation was the definition of a list of commands/messages to be issued to the AUV; the list currently contains around fifty-two messages. The commands/messages are divided into seven groups: Problems (8), Movement (at least 13), Setting variables (10), Interrupt (4), Feedback (3), Works/tasks (at least 14) and Slang, which is a special set (see Deliverable 3.1 for more information). The Slang group was introduced in the second version of CADDIAN to improve acceptance among divers; it is a subset that intersects the previous groups.

The grammar of the language is context-free, and its syntax is specified through BNF productions (see Deliverable 3.1). Applying the syntax to messages/commands yields their CADDIAN written forms.

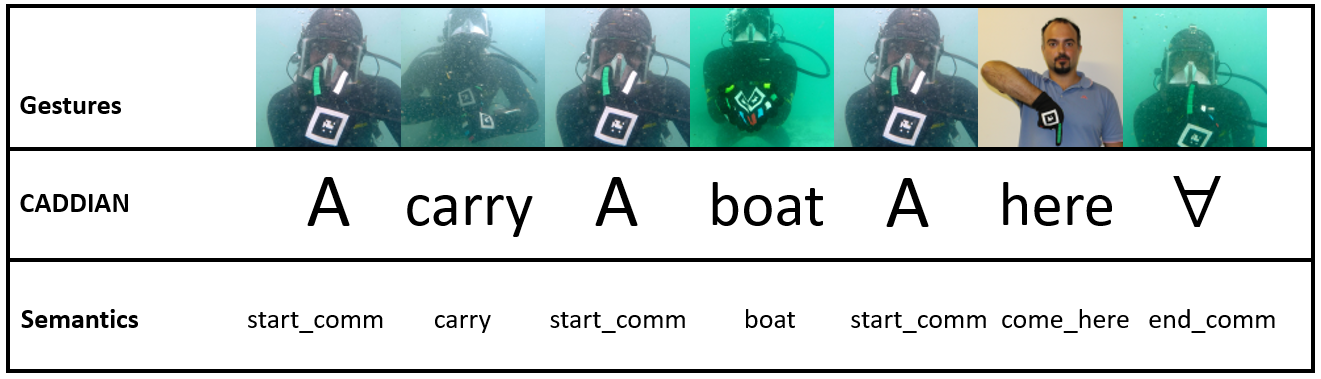

Fig. SO3.3. By applying the CADDIAN syntax, messages/commands can be transmitted to AUV.

A CADDIAN dictionary has been made for quick referencing (see Deliverable 3.1).

After defining the commands, a communication protocol with error handling has been developed which ensures a strict cooperation between the diver and the robot. Thanks to this protocol, the diver is able to know the progress of a task/mission that has been requested to the vehicle and to understand whether it has been completed, while the robot can show the diver which CADDIAN message it understood during the communication.

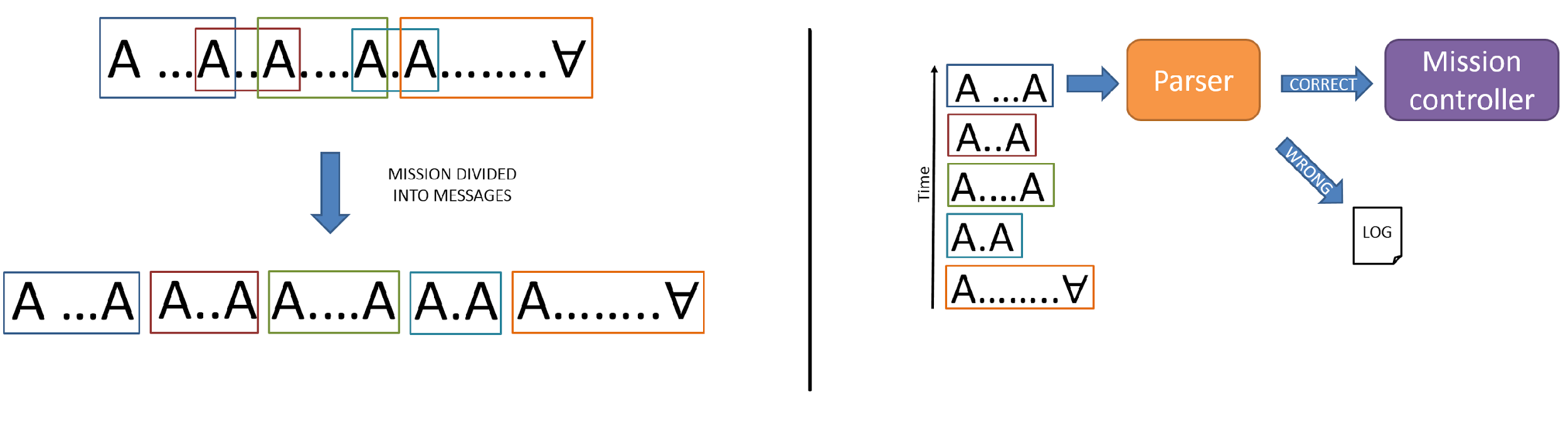

The gesture sequence interpretation is implemented through a parser (Syntax Checker) that accepts syntactically correct sequences and rejects the wrong ones. The parser accepts commands or messages, which are, to all intents and purposes, sequences of gestures. Each gesture can be represented by a symbol. In this way, a message/command is a sequence of symbols/gestures delimited at the beginning by a “Start communication” symbol and at the end by either the same symbol or an “End of communication” symbol (see Fig. SO3.4). Depending on which symbol follows the initial one (i.e. “Start communication”), the Syntax Checker proceeds by applying the corresponding CADDIAN syntax rules.

Fig. SO3.4. Left: mission segmentation into single messages. Right: single messages processed by the parser.

Missions (sequences of commands/messages, see Deliverable 3.1) are segmented into messages, and the Syntax Checker parses each message. If a sequence is accepted it is, by virtue of that acceptance, a command, and it is passed to the mission controller. If the sequence is wrong, the error is logged and a warning is issued to the diver.
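The segmentation step can be sketched as follows. This is a minimal illustration of the delimiting rule described above, not the project's implementation; the symbol codes `START_COMM` and `END_COMM` and the function name are assumptions made for the example.

```python
START = "START_COMM"  # placeholder code for the "Start communication" gesture
END = "END_COMM"      # placeholder code for the "End of communication" gesture


def segment_mission(symbols):
    """Split a stream of gesture symbols into individual messages.

    A message opens with START and closes at the next START (which also
    opens the following message) or at END (which closes the mission).
    Symbols seen before the first START, and a trailing message that is
    never closed, are ignored.
    """
    messages = []
    current = None  # None means no message is currently open
    for symbol in symbols:
        if symbol == START:
            if current:  # close the previous, non-empty message
                messages.append(current)
            current = []
        elif symbol == END:
            if current:
                messages.append(current)
            current = None
        elif current is not None:
            current.append(symbol)
    return messages
```

For instance, the stream `START_COMM, UP, 3, START_COMM, DOWN, 1, END_COMM` would be split into two messages, each of which is then handed to the parser separately.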

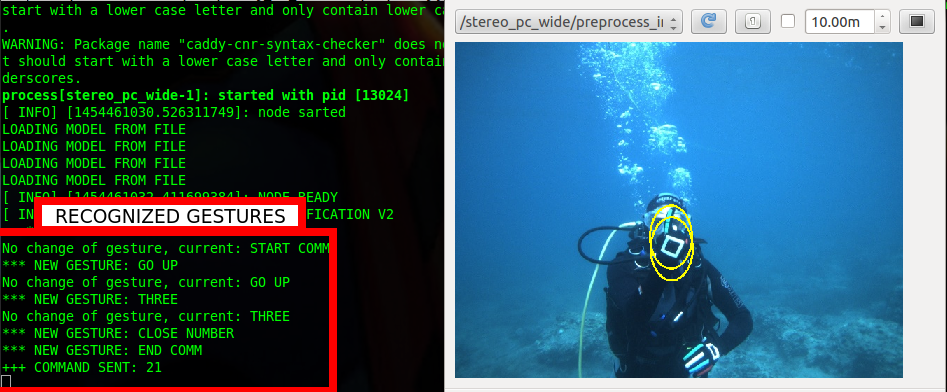

The Syntax Checker has been realized as a ROS node that checks and validates each command before allowing the robot to execute it. Fig. SO3.5 shows a screenshot of the classification system: the left-hand side shows a list (history) of the detected gestures, and the right-hand side shows the monocular image with the detected gesture. The command code is sent to the AUV if, and only if, the list of gestures obeys the rules described above (i.e. it is approved by the Syntax Checker). In the case shown, a “Start communication” signal was received, followed by “Up” (a Direction symbol), a “Number”, a “Number Delimiter” and “End communication”.
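The accept-or-reject behaviour for a sequence like the one above can be illustrated with a toy checker. The single production used here (a direction, one or more digits, then a number delimiter) is an assumption modelled on the example; the actual BNF productions are given in Deliverable 3.1, and all symbol codes and function names are hypothetical.

```python
DIRECTIONS = {"UP", "DOWN", "LEFT", "RIGHT"}  # placeholder direction symbols
DIGITS = set("0123456789")                    # single-digit number symbols
NUM_DELIMITER = "NUM_DELIM"                   # placeholder delimiter symbol


def is_valid_movement(message):
    """Check a message against the toy production
    <movement> ::= <direction> <digit>+ NUM_DELIM
    """
    if len(message) < 3:  # need a direction, at least one digit, a delimiter
        return False
    direction, *digits, delimiter = message
    return (
        direction in DIRECTIONS
        and delimiter == NUM_DELIMITER
        and all(d in DIGITS for d in digits)
    )


def check_and_dispatch(message, send, warn):
    """Forward a valid message with send(); otherwise report it with warn()."""
    if is_valid_movement(message):
        send(message)
        return True
    warn(message)
    return False
```

The message passed in is the content between the start and end delimiters, so the delimiters themselves are not part of the check.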

Fig. SO3.5. Classification system detecting hands and labelling individual gestures. If both a “Start” and an “End” communication gesture are received, the symbols in between are checked by the syntax checker and sent to the AUV if correct.

Fig. SO3.6. Diagram of the ROS software nodes and how they communicate with each other during a mission generated through diver gestures. The name of each ROS topic is displayed at the bottom.

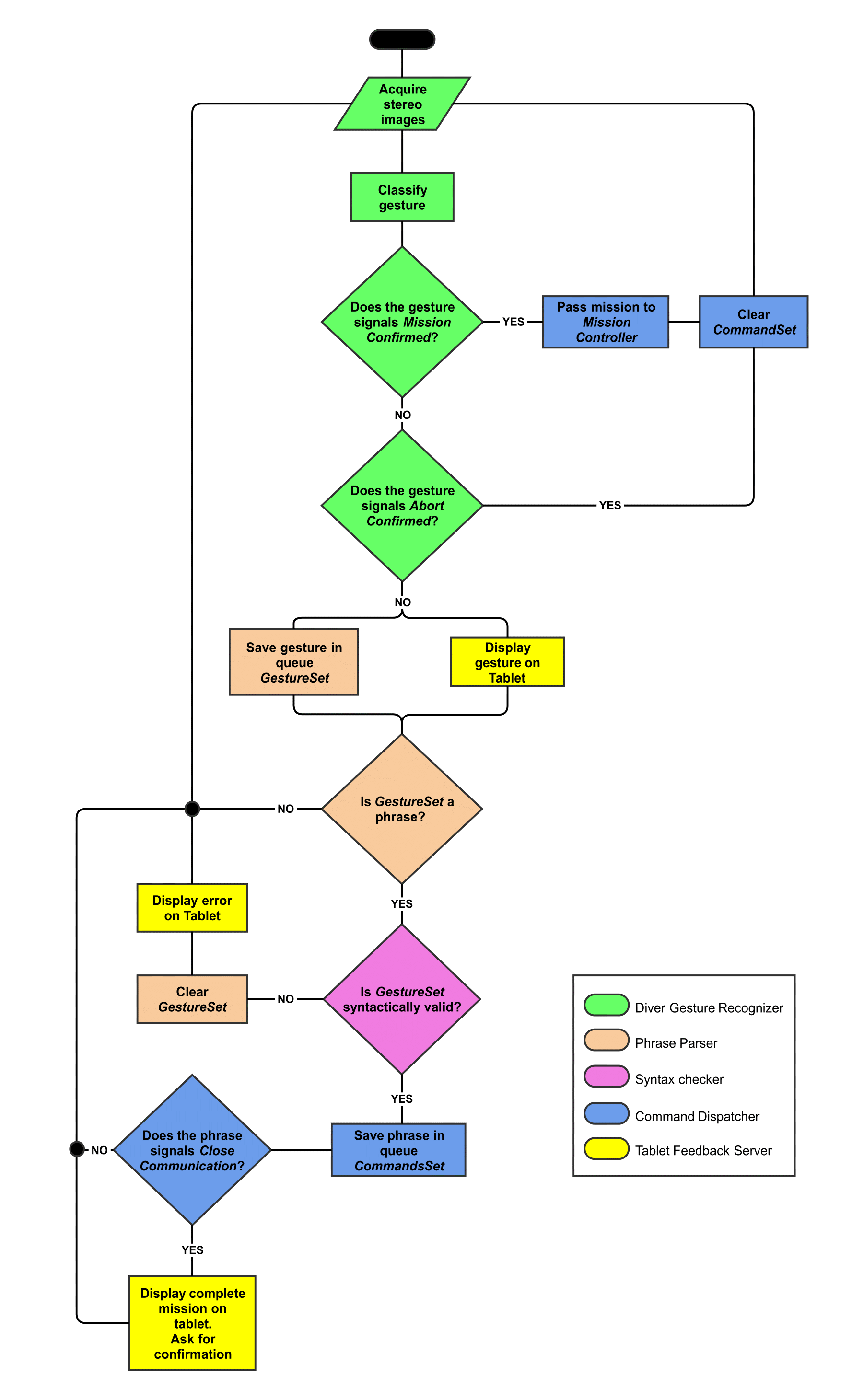

Fig. SO3.6 shows how the different modules communicate with each other in the ROS environment (via topics). The purpose of each software module or ROS node is the following:

- Diver gesture classifier: Recognizes diver gestures from stereo images. The first gesture to be recognized must be START COMMUNICATION; any gesture performed before it is not recognized.

- Phrase Parser: The code of each recognized gesture is sent to this module; the gestures performed between two START COMMUNICATION signals, or between a START COMM and an END COMM, are saved as a string of codes and sent to the Syntax checker module. Thus, the purpose of this node is to identify a sequence of gestures that stands for a phrase within the CADDIAN language.

- Syntax checker: CADDIAN phrases are received by this module and evaluated to see whether they are syntactically correct according to the CADDIAN rules. If valid, these phrases are sent to the Command Dispatcher module; otherwise, an alert is sent to the tablet to signal to the diver that there was a mistake, and the stored string of gestures is erased.

- Command dispatcher: The purpose of this module is to save valid CADDIAN phrases in a queue until an END COMM signal is received. It then sends these phrases or missions to the Mission controller whose functionality is described in the following sections.

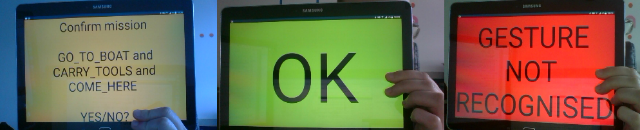

- Tablet Feedback: This module receives flags/signals from the other modules in order to give feedback to the diver throughout the communication process. Every time a gesture is performed, the tablet displays what was recognized; in this way, the diver can see whether his/her command was misclassified and, if so, restart the process. Likewise, when a phrase is not syntactically correct, a message is sent to the diver. Finally, when an END COMM gesture is recognized, the tablet shows all the phrases (i.e. the mission) recognized up to that moment and asks the diver to confirm or abort their execution. This ROS node prepares the topic to communicate with the tablet; the ROS topic is sent via Bluetooth from the vehicle computer to the tablet, and an application on the tablet interprets the topic to display it in an appropriate format.
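The interplay between the Syntax checker, the Command dispatcher and the Tablet Feedback module described in the list above can be sketched in plain Python, without any ROS API. The class and callback names are hypothetical, and for brevity the sketch folds the validity check into the dispatcher, whereas in the described system these are separate ROS nodes exchanging topics.

```python
from collections import deque


class CommandDispatcher:
    """Queue valid phrases until END COMM, then release them as one mission."""

    def __init__(self, is_valid, notify_tablet):
        self.is_valid = is_valid            # stand-in for the Syntax checker
        self.notify_tablet = notify_tablet  # stand-in for the Tablet Feedback node
        self.queue = deque()

    def on_phrase(self, phrase):
        """Handle one phrase delivered by the Phrase Parser."""
        if self.is_valid(phrase):
            self.queue.append(phrase)
            self.notify_tablet(("accepted", phrase))
        else:
            # An invalid phrase is discarded and the diver is alerted.
            self.notify_tablet(("syntax_error", phrase))

    def on_end_comm(self, diver_confirms):
        """Return the mission if the diver confirms it, otherwise an empty list."""
        mission = list(self.queue)
        self.queue.clear()
        self.notify_tablet(("mission_summary", mission))
        return mission if diver_confirms(mission) else []
```

Passing the validity check and the tablet notification in as callables keeps the queueing logic independent of how the other modules are actually connected.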

Fig. SO3.7. State machine diagram of the gesture recognition and mission generation process. Each action is colour coded according to which ROS node/module performs it.

As part of the gesture-based underwater communication protocol between the diver and the BUDDY vehicle, a feedback system has been developed that provides the diver with immediate visual information pertaining to the robot’s reception and interpretation of the gesture command in progress, for both simple and complex gestures.

Fig. SO3.8. Example of feedback messages displayed on BUDDY tablet during gesture recognition

This subobjective is considered to be completed. The developed system has been tested and has been used successfully during the final validation trials.

SO3.b. Development of an online cognitive mission replanner.

The cognitive mission replanner based on Petri nets has been developed and tested. The CADDY mission control system is a modular framework designed and developed to manage the state tracking, task activations and reference generation required to support the diver operations. As depicted in Fig. SO3.9, the mission controller acts as a cognitive planning and supervision system: it takes as input the decoded gesture sequence executed by the diver and in turn generates the task activation actions that trigger the capabilities needed to provide the requested support.

Fig. SO3.9. Main modules of high-level CADDY functionalities

The main goal of the mission control module is to abstract the robotic capabilities at logical level and manage the activation of the real robotic primitives, while at the same time resolving possible conflicts that may arise when selecting the robotic capabilities to be triggered.

In the CADDY logical framework, three classes of executable units have been identified (already described in Objective SO2.c):

- functional primitives;

- high-level logical tasks;

- low-level robotic tasks.

The primitives are linked to the support actions that the diver can request by means of the gesture-based language: once a gesture or a complex sequence of gestures is recognized and validated, it is sent to the mission controller, which activates the proper primitive to start the support operation.

Each primitive activates a set of high-level tasks that represent the logical functionalities needed to fulfil the requested operation.

The high-level logical tasks activate in turn a set of robotic tasks that enable and execute the physical operations on the real robot devoted to the support of the diver operations.
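The three-layer cascade can be illustrated with two lookup tables. All primitive and task names below are invented for the example; the real sets are defined in the CADDY configuration files.

```python
# Hypothetical configuration: which high-level tasks each primitive needs,
# and which low-level robotic tasks each high-level task needs.
PRIMITIVE_TO_HIGH_LEVEL = {
    "follow_diver": ["track_diver", "keep_distance"],
    "go_to_depth": ["depth_control"],
}
HIGH_LEVEL_TO_ROBOTIC = {
    "track_diver": ["sonar_tracking", "heading_controller"],
    "keep_distance": ["surge_controller"],
    "depth_control": ["heave_controller"],
}


def tasks_to_activate(primitive):
    """Expand a primitive into the high- and low-level tasks it triggers."""
    high_level = PRIMITIVE_TO_HIGH_LEVEL[primitive]
    robotic = []
    for task in high_level:
        for low in HIGH_LEVEL_TO_ROBOTIC[task]:
            if low not in robotic:  # activate each robotic task only once
                robotic.append(low)
    return high_level, robotic
```

In this reading, a validated gesture sequence selects one primitive, and everything below it follows from the configuration.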

For the automatic selection, activation and inter-task conflict management, a Petri net based execution control system has been developed. The system is configured by means of a set of configuration files that specify, on one side, the capabilities of the robot in terms of autonomous tasks and, on the other side, the set of high-level functionalities that the CADDY system has to provide for the diver support. A real-time Petri net engine models the logical interconnections among the tasks and primitives and, depending on the specific actions commanded by the diver, automatically handles the activation/deactivation of the proper task sets.


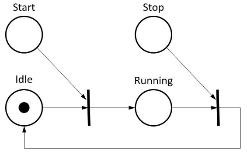

Fig. SO3.10. Basic task representation in the Petri Net based mission controller


Each mission controller task is logically represented by a simple Petri net that defines the activation state of the task. As shown in Fig. SO3.10, two places represent the two states of the task: idle and running. Two other places represent the start and stop events, while two transitions define the evolution rules of the task net. The idle place is initially marked, representing the initial condition of a deactivated task.
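A minimal sketch of such a task net is given below. The arc structure (the start event together with idle enables activation, and the stop event together with running enables deactivation) is an assumption inferred from the description, and the class and transition names are hypothetical.

```python
class TaskNet:
    """Minimal Petri net for one task: four places and two transitions."""

    def __init__(self):
        # Marking: number of tokens per place. Idle is initially marked.
        self.marking = {"idle": 1, "running": 0, "start": 0, "stop": 0}
        # Each transition maps to (input places, output places).
        self.transitions = {
            "activate": (("idle", "start"), ("running",)),
            "deactivate": (("running", "stop"), ("idle",)),
        }

    def enabled(self, name):
        """A transition is enabled when every input place holds a token."""
        inputs, _ = self.transitions[name]
        return all(self.marking[p] > 0 for p in inputs)

    def fire(self, name):
        """Fire a transition if enabled; return whether it fired."""
        if not self.enabled(name):
            return False
        inputs, outputs = self.transitions[name]
        for p in inputs:
            self.marking[p] -= 1
        for p in outputs:
            self.marking[p] += 1
        return True

    def signal(self, event):
        """Put a token in the 'start' or 'stop' event place and run the net."""
        self.marking[event] += 1
        for name in self.transitions:
            self.fire(name)
        # Assumption: an event that cannot be consumed is discarded,
        # so a stale token cannot trigger a transition later on.
        self.marking[event] = 0
```

With this structure a start event has no effect on a task that is already running, and a stop event has no effect on an idle task.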

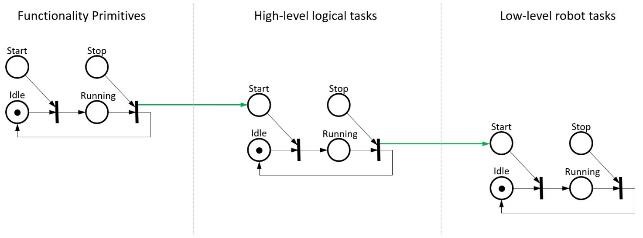

The Petri Nets representing the tasks are then connected through suitable arcs joining the running state of a logical layer to the start place of the following layer, as depicted in Fig. SO3.11. In such a way a cascade activation of the task chain is carried out.

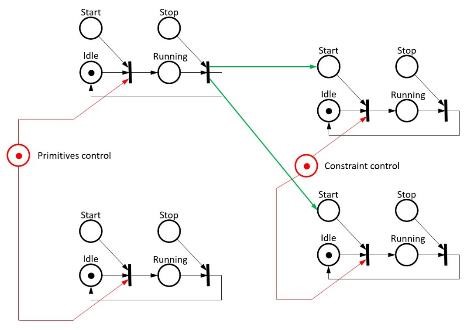

With the proposed scheme, two main issues remain to be handled: the mutually exclusive activation of the functional primitives and the conflict management in the execution of high- and low-level tasks. To enforce mutual exclusion among primitives, it is sufficient to insert an additional control place at the primitive level, so that only one primitive at a time can switch to the running state, as shown in Fig. SO3.12.

Fig. SO3.11. Cascade-layer tasks connection

The inter-task conflict issue arises from the fact that different tasks can generate values for the same output variable. To resolve the conflicts, a constraint place is added between two or more conflicting tasks whenever the same output variable is shared among them (see Fig. SO3.12). This constraint place enforces mutual exclusion in the execution of the conflicting tasks and keeps the state of the mission net consistent.
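The effect of a constraint place can be sketched with a small place/transition net. The example assumes that the constraint place holds a single token which a task takes when it starts and returns when it stops; the task and variable names are invented, and the start/stop event places of each task net are omitted for brevity.

```python
class PetriNet:
    """Tiny place/transition net with unit arc weights."""

    def __init__(self, marking, transitions):
        self.marking = dict(marking)
        self.transitions = transitions  # name -> (input places, output places)

    def fire(self, name):
        """Fire a transition if all its input places hold a token."""
        inputs, outputs = self.transitions[name]
        if any(self.marking[p] == 0 for p in inputs):
            return False
        for p in inputs:
            self.marking[p] -= 1
        for p in outputs:
            self.marking[p] += 1
        return True


def build_conflict_net(task_a, task_b, shared_output):
    """Two tasks writing the same output variable, guarded by one constraint place."""
    lock = f"free_{shared_output}"  # the constraint place, initially marked
    marking = {lock: 1}
    transitions = {}
    for task in (task_a, task_b):
        marking[f"{task}_idle"] = 1
        marking[f"{task}_running"] = 0
        # Starting a task takes the constraint token; stopping returns it.
        transitions[f"start_{task}"] = ((f"{task}_idle", lock), (f"{task}_running",))
        transitions[f"stop_{task}"] = ((f"{task}_running",), (f"{task}_idle", lock))
    return PetriNet(marking, transitions)
```

While one of the two tasks is running the constraint place is empty, so the start transition of the other task cannot fire until the first task stops.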

Fig. SO3.12. Mutually exclusive and conflict-free mission control Petri Net

This subobjective is considered completed. The developed algorithms have been tested and validated during the Software Integration Workshop (held in May 2016, Zagreb) and have been extensively tested during the final validation trials (October 2016, Biograd na Moru).